“And I’m hovering like a fly, waiting for the windshield on the freeway.” This is a well-known line from the song “Fly on a Windshield” by Genesis. I love listening to this song, especially the guitar part right after this line, which emphasizes the windshield hitting the fly. Anyone who has driven a car on a hot summer day knows the experience: squashed bugs on your windshield. Whether you like it or not, they are inevitable, and they become annoying.

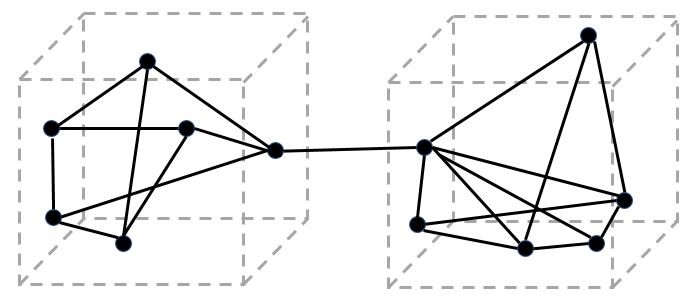

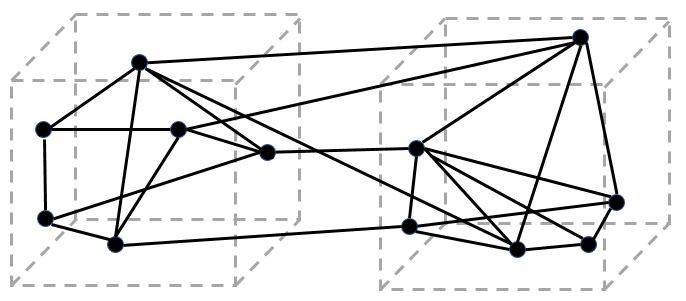

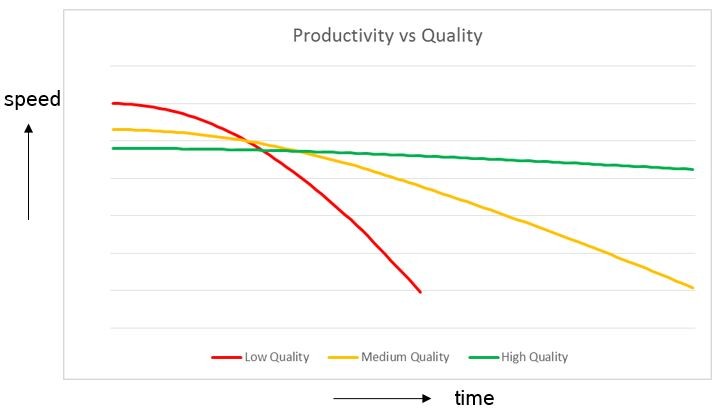

These squashed bugs on your windshield can be compared with technical debt in software development. Both ‘creep’ in, and both are inevitable. Bug by bug gets squashed; piece by piece, a little bit of code becomes unnecessarily complex. Unnecessary dependency by unnecessary dependency is added to your software. Compiler warning by compiler warning goes unresolved. Each of these represents a little bit of technical debt. Individually, these squashed bugs are not a problem, unless a large bug is squashed right in your field of view. However, if no corrective action is taken, such as using your wipers to clean the windshield, the squashed bugs pile up. Many squashed bugs, even small ones, become annoying simply because of their number. The same holds for technical debt in your software: a few imperfections in the code will not hamper you, but many of them will.

It is not only the squashed bug itself that matters; its location on the windshield matters as well. If a squashed bug sits somewhere at the edge of your windshield, who cares? Sometimes, however, I find one right in front of me, in the middle of my line of sight. Very annoying. The same applies to technical debt. Take a function with too high a cyclomatic complexity as an example. If this function has not caused any problems so far, has passed all tests, and is not about to be changed, who cares? Just leave it as is, like the squashed bug at the edge of your windshield. However, if you need to change this overly complex function for whatever reason, you will suffer from its complexity just as you suffer from a squashed bug in your line of sight.

And then there are small and big bugs. Small bugs leave only a small spot on the windshield, whereas big bugs leave big spots. Similarly, in software development, small imperfections in code can be handled by the ‘boy-scout rule’, which states that you should leave the code cleaner than you found it: a small effort to remove a small imperfection. It is like using your windshield wipers to wipe away small squashed bugs. Bigger imperfections, however, may require dedicated restructuring that has to be prioritized and planned, just as large squashed bugs cannot be removed with your wipers alone and call for more thorough cleaning.

In 1992, Ward Cunningham compared technical imperfections to debt (as in finance). The accumulation of technical imperfections in your software is like financial debt. You pay interest in the form of the extra effort needed to work with software that has grown more complex as imperfections accumulate, and you can make repayments to get rid of some of the debt, for example by restructuring or refactoring your code. When you are driving on the highway this summer and a bug gets squashed against your windshield, think about the technical debt in your software.